We’ve all been there: you’re at the most intense moment of a movie or a live event, and suddenly, the picture freezes. That spinning wheel of death appears. It’s a universally frustrating sight, but what we call video buffering is really just a data flow problem—one that can be solved.

Buffering happens when your device plays video faster than it can download the next chunk of it. Think of it as an assembly line. As long as new parts arrive just before they’re needed, everything runs smoothly. But if the supply line gets held up, the whole operation grinds to a halt until the next shipment comes in. That pause is buffering.

This guide will demystify that spinning wheel, turning a vague annoyance into an understandable challenge with clear, practical solutions.

Why That Spinning Wheel Haunts Your Streams

Your video stream is on an incredible journey, traveling from a server that could be thousands of miles away, through a maze of networks, all the way to your screen. A lot can go wrong on that trip.

We’re going to break down the entire delivery chain, piece by piece, to understand where these “unscheduled stops” happen. Knowing the why behind buffering is the first, most critical step to actually fixing it.

From Server to Screen: The Data Journey

A video’s path is filled with potential bottlenecks, and each one is a chance for that dreaded spinning icon to pop up. Let’s look at the most common points of failure.

- Viewer-Side Issues: Sometimes the problem is right in the room. A slow Wi-Fi connection, too many devices hogging bandwidth on the local network, or even an older device that just can’t keep up with processing the video data can all cause buffering.

- Network Congestion: This is the digital equivalent of a traffic jam. It could be the “last mile” where your Internet Service Provider (ISP) is struggling to deliver data, or it could be broader congestion across the internet’s backbone.

- Provider Infrastructure: The issue can also be at the source. If the streaming provider’s servers are overloaded with too many viewers at once, they might not be able to send out the video data fast enough to keep everyone’s stream running smoothly.

By isolating each stage of the journey, you can stop guessing and start diagnosing where the real slowdown is happening.

At its core, the principle is simple: for a video to play without interruption, the download speed must stay ahead of the playback speed. When that balance tips the wrong way, buffering is what you get.

Don’t worry, this isn’t a problem without a solution. We’ll soon get into the powerful tools modern streaming platforms use to keep things running smoothly. Technologies like Adaptive Bitrate (ABR) streaming and Content Delivery Networks (CDNs) are specifically designed to anticipate and route around these digital traffic jams, ensuring a steady, reliable viewing experience for your audience.

How Video Streaming Actually Works

To really get a handle on buffering, you first need to understand the wild journey a video takes to get from a server to your screen. It’s nothing like downloading a single file. Instead, streaming is a constant, high-speed delivery of small pieces of video, which cross the network as packets and which your device stitches together just in time for you to watch.

This whole process is a minor miracle of engineering, built to give you a smooth experience even when your internet connection is having a bad day. It all comes down to a delicate dance between download speed and playback speed, managed by a small but mighty component on your device: the video buffer.

The Role of the Video Buffer

Think of the video buffer as a small, temporary holding pen on your device. It’s a waiting room where the next few seconds of video hang out before they appear on screen. Its job is to pre-load a little bit of content, creating a safety cushion.

This cushion is everything. It ensures that even with small network hiccups or a temporary dip in speed, your video keeps rolling without a hitch by playing from this local stockpile. Your player isn’t waiting on every single packet to arrive from across the internet; it’s just grabbing the next piece from the buffer right next door.

The main idea is simple: as long as new data fills the buffer faster than your player drains it, your video plays smoothly. Streaming video buffering is what happens the moment that buffer runs dry.

When the buffer is empty, the video player has no choice but to hit pause and show you that dreaded spinning wheel. It’s waiting for the “delivery truck”—your internet connection—to show up and refill the empty waiting room. We experience that pause as buffering, and it’s a killer for engagement. In fact, one study found that when buffering lasts just 2-3 seconds, viewer abandonment skyrockets.
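The fill-and-drain behavior described above can be sketched in a few lines. This is a simplified model, not how any particular player is built: the function name and the numbers are illustrative assumptions, and it treats download speed as constant, which real networks rarely are.

```python
def simulate_buffer(download_kbps, bitrate_kbps, start_buffer_s, duration_s):
    """Return the second at which playback stalls, or None if it never does.

    Each wall-clock second the network adds download_kbps / bitrate_kbps
    seconds of video to the buffer, and playback removes one second.
    """
    buffer_s = start_buffer_s
    for second in range(1, duration_s + 1):
        buffer_s += download_kbps / bitrate_kbps  # fill from the network
        buffer_s -= 1.0                           # drain by playback
        if buffer_s <= 0:
            return second  # buffer ran dry: the spinner appears here
    return None


# A 4,000 kbps video on a 2,000 kbps connection, starting with a 5 s cushion.
print(simulate_buffer(2000, 4000, 5, 60))  # → 10
# The same video on a 5,000 kbps connection never stalls in this model.
print(simulate_buffer(5000, 4000, 5, 60))  # → None
```

In the first case the buffer loses half a second of video for every second watched, so the 5-second cushion is gone after 10 seconds.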

From A Single File to Tiny Chunks

The video you’re watching didn’t start as a stream. It began its life as one massive digital file. Before it can be sent to you, that file has to be broken down into smaller, more manageable pieces, or “chunks.”

This involves a few critical steps:

- Encoding and Compression: The original video is squeezed down to a smaller file size, making it much easier to send over the internet.

- Transcoding: That compressed video is then converted into several different versions, each with a different quality and bitrate. Understanding what video transcoding is goes a long way toward explaining how modern streaming adapts on the fly.

- Segmentation: Finally, each of those versions is sliced into small segments, usually just a few seconds long each.

These segments are the individual pieces that get sent to your device. This method is the secret sauce behind features like Adaptive Bitrate Streaming, allowing the player to switch between different quality segments based on what your network can handle at that exact moment.
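To make the segmentation step concrete, here is a small sketch of how one video might be laid out as segments across several renditions. The function name, the rendition labels, and the file naming scheme are all hypothetical; real packagers use their own conventions.

```python
import math


def plan_segments(duration_s, segment_s, renditions):
    """Map each rendition to the list of segment names it would be split into."""
    count = math.ceil(duration_s / segment_s)  # the last segment may be shorter
    return {
        name: [f"{name}/segment_{i:04d}" for i in range(count)]
        for name in renditions
    }


# A 10-second clip cut into 4-second segments for two renditions.
plan = plan_segments(10, 4, ["1080p", "360p"])
print(len(plan["1080p"]))  # → 3
print(plan["360p"][0])     # → 360p/segment_0000
```

Because every rendition is cut at the same points, the player can take segment 0 from one rendition and segment 1 from another without skipping or repeating any video.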

The Step-by-Step Streaming Process

So, let’s trace the journey from the moment you hit “play” to the video appearing on your screen. This sequence is happening over and over, non-stop, in the background.

- Initial Request: Your device pings the streaming server, asking to start the video.

- Manifest File Delivery: The server’s first move is to send back a “manifest file.” Think of it as a table of contents; it tells your video player about all the available video segments and their different quality levels.

- First Segment Download: Your player reads the manifest, quickly checks your current internet speed, and requests the first few segments at the right quality.

- Filling the Buffer: These first few segments land in the video buffer, building up that all-important playback cushion.

- Playback Begins: As soon as the buffer has a few seconds of video ready to go, the player starts the show.

- Continuous Monitoring: While the video is playing, your device is constantly downloading the next segments to keep the buffer topped up. At the same time, it’s keeping an eye on your internet connection, ready to switch to a higher or lower quality stream if needed.
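The manifest and first-segment steps above can be sketched as follows. The article does not name a manifest format, so this example assumes an HLS-style master playlist, which is one common option. The sample manifest, the file paths, and both function names are made up for illustration.

```python
import re

# Hypothetical HLS-style master playlist listing three quality levels.
SAMPLE_MANIFEST = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
360p/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720
720p/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
1080p/index.m3u8
"""


def parse_manifest(text):
    """Return (bandwidth_bps, uri) pairs, lowest bandwidth first."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    variants = []
    for i, line in enumerate(lines):
        if line.startswith("#EXT-X-STREAM-INF:"):
            # Match BANDWIDTH but not AVERAGE-BANDWIDTH.
            match = re.search(r"[:,]BANDWIDTH=(\d+)", line)
            if match and i + 1 < len(lines):
                variants.append((int(match.group(1)), lines[i + 1]))
    return sorted(variants)


def pick_first_rendition(variants, measured_bps):
    """Pick the highest variant that fits the measured speed, else the lowest."""
    fitting = [v for v in variants if v[0] <= measured_bps]
    return fitting[-1] if fitting else variants[0]


variants = parse_manifest(SAMPLE_MANIFEST)
print(pick_first_rendition(variants, 3_000_000))  # → (2800000, '720p/index.m3u8')
```

A production player would then fetch that rendition's own playlist to find the individual segments; that step is left out here to keep the sketch short.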

Diagnosing the Common Causes of Buffering

That frustrating spinning wheel is more than just an annoyance; it’s a symptom. Think of streaming video buffering like a check engine light in your car—it tells you something’s wrong, but it doesn’t pinpoint the exact problem. The culprit could be in the viewer’s living room, at a server farm across the country, or somewhere in the complex digital web connecting the two.

To actually fix buffering, you have to play detective. The breakdown almost always happens in one of three places: the viewer’s local setup, the wider network, or the streaming provider’s own infrastructure. Nailing down the source is the single most important step toward finding a real solution.

Problems Originating from the Viewer’s End

More often than not, the source of buffering is surprisingly close to home. The equipment and network conditions inside a viewer’s house are common culprits, creating a bottleneck right at the last leg of the video’s journey.

These local issues are tricky because they’re unique to each user, making them a real headache for a streaming service to diagnose from afar.

Several factors can come into play on the viewer’s side:

- Congested Home Wi-Fi: Your Wi-Fi router is like a busy intersection. If too many devices—laptops, phones, smart TVs, and game consoles—are all trying to get through at once, you get a traffic jam. This digital gridlock slows everything down, and the video player simply can’t get the data it needs fast enough.

- Outdated Hardware: An old streaming device, smart TV, or computer might not have the muscle to handle modern, high-bitrate video. In these cases, the device itself becomes the weak link, struggling to decode and play the stream smoothly even with a lightning-fast internet connection.

- Background Bandwidth Hogs: Other apps running on the same device or network can be silent bandwidth thieves. A big file download, an automatic software update, or someone else in the house streaming in 4K can gobble up all the available capacity, leaving your video to stutter and buffer.

The good news is that these are often the easiest problems for a viewer to fix themselves. A simple device restart or a quick check for other network activity can sometimes work wonders.

Network and ISP Bottlenecks

Sometimes the problem isn’t in the house, but it isn’t at the streaming service’s end either. It’s stuck in the middle ground—the vast, complicated network of cables and routers that make up the internet.

Your Internet Service Provider (ISP) is a major player here. The connection from their central hub to your home, often called the “last mile,” can be a significant bottleneck, especially during peak evening hours when everyone in the neighborhood is online.

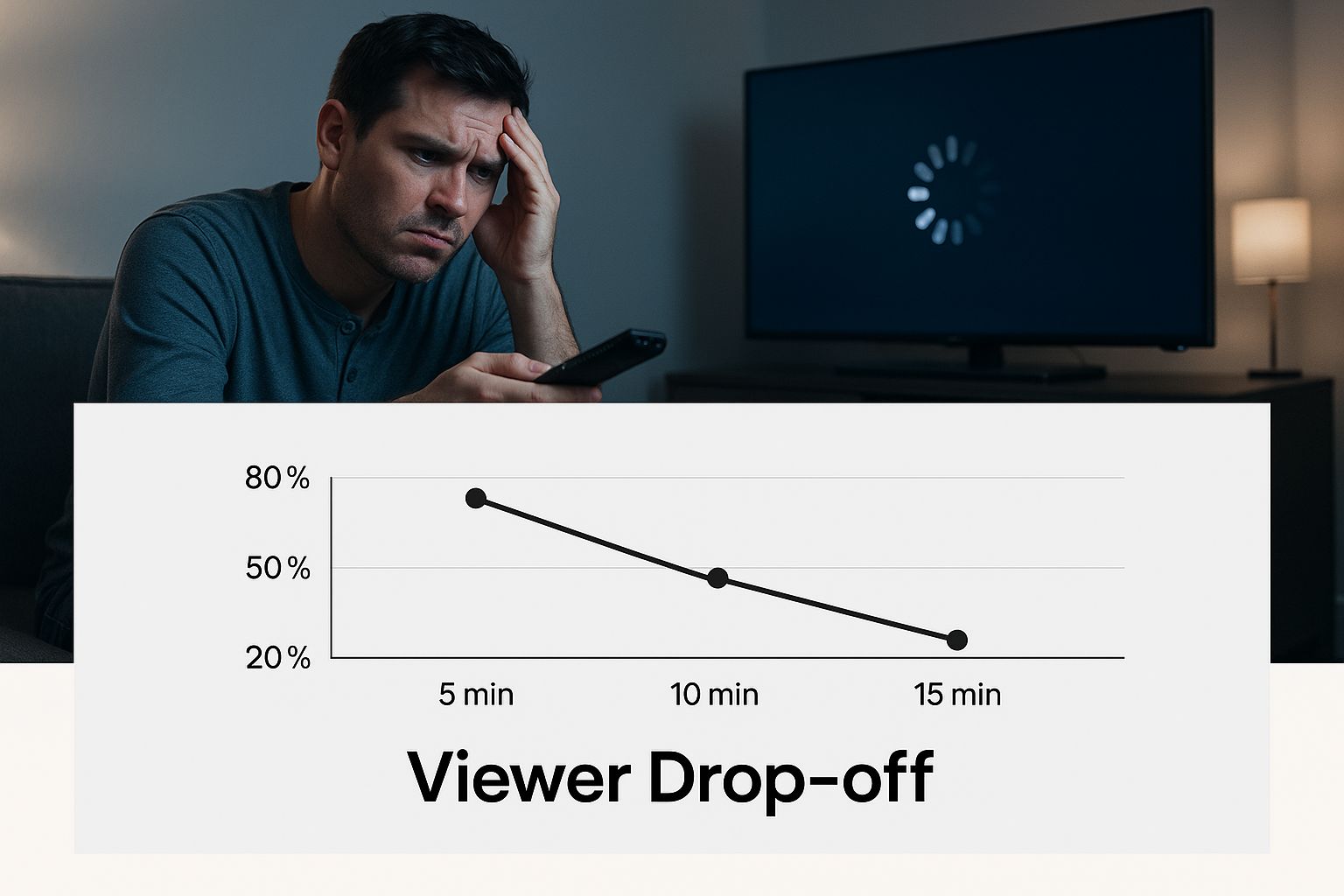

Buffering isn’t just a minor inconvenience; it’s a direct threat to keeping your audience. Even a few seconds of waiting can be the difference between a happy viewer and a lost one.

This infographic paints a clear picture of just how quickly viewers abandon a stream when buffering strikes.

As you can see, viewer patience wears thin almost immediately, and the cost in lost viewers is real. Research shows that if a stream buffers for just 2-3 seconds, 28% of viewers will click away. If that delay stretches to 5 seconds or more, you’ve lost a staggering 65% of your audience. You can dive deeper into the data on how buffering impacts viewer engagement by reviewing the full research on vodlix.com.

Issues at the Streaming Provider Level

Finally, the problem might start at the source. A viewer can have a perfect home setup and a flawless internet connection, but if the streaming provider’s infrastructure isn’t ready for primetime, buffering is inevitable.

These server-side issues are often the most frustrating because they are completely out of the viewer’s hands. When they happen, they usually affect a large number of users at once, which is a clear sign of a systemic problem.

Common issues on the provider’s end include:

- Overloaded Servers: When a live event goes viral or more users log on than expected, the origin servers can get slammed. They just can’t keep up with the demand, causing widespread buffering for everyone trying to watch.

- Inefficient Content Delivery: A service that relies on a single data center or a poorly configured Content Delivery Network (CDN) will always struggle to serve a global audience. Viewers far away from the server will experience high latency, meaning the data takes too long to arrive—a direct cause of buffering.

To help you get a clearer picture, it’s useful to organize these potential issues by where they originate.

Common Sources of Streaming Video Buffering

The table below breaks down the most frequent causes of buffering and categorizes them by where the problem lies in the streaming chain.

| Origin of Issue | Specific Cause | Brief Explanation |
|---|---|---|
| Viewer’s Setup | Slow or Congested Wi-Fi | Too many devices on one network are fighting for bandwidth, slowing down video data. |
| Viewer’s Setup | Outdated Device or Browser | The hardware or software can’t process modern, high-quality video streams efficiently. |
| Network Connection | ISP Throttling or Congestion | The Internet Service Provider might slow down speeds or face heavy traffic in the area. |
| Network Connection | Poor “Last-Mile” Connectivity | The physical connection from the ISP to the home is slow or unreliable, creating a bottleneck. |
| Provider’s Side | Overloaded Origin Servers | A surge in viewers overwhelms the provider’s servers, so they can’t send data fast enough. |
| Provider’s Side | Poor CDN Integration | The Content Delivery Network isn’t distributing the stream effectively, causing delays for distant viewers. |

By understanding these distinct categories, you can start to systematically diagnose and address the root cause of buffering, rather than just treating the symptom.

Using Adaptive Bitrate Streaming to Beat Buffering

Ever notice how a video keeps playing on your phone even when you walk through a spot with terrible reception? That’s not an accident. The single most powerful weapon we have against that dreaded streaming video buffering wheel is a technology called Adaptive Bitrate Streaming (ABR).

Think of ABR as an incredibly smart traffic cop for your video stream. Instead of trying to shove one massive, high-quality video file down a congested internet connection, ABR keeps several versions of the same video on hand, each encoded at a different bitrate. When the player senses a traffic jam (your network slowing down), it requests the next piece of video from a lower-quality version to keep things moving. This switch prioritizes a continuous, uninterrupted experience over perfect, pixel-for-pixel quality at all times.

How ABR Creates a Flawless Experience

The real work of ABR happens long before you ever press play. A single, high-quality source video is run through an encoder that creates several different versions, often called “renditions.”

Each rendition has a different bitrate, which is the amount of data used for each second of video. A higher bitrate generally means better picture quality and a bigger download. A stunning 4K stream will have a very high bitrate, while a basic 360p version will have a much lower one.

Here’s a quick look at how it all comes together:

- Create the Renditions: The original video is encoded into a “bitrate ladder”—a full range of quality options, from crystal-clear 4K down to a mobile-friendly 360p.

- Chop It Up: Each of these renditions is then sliced into small, manageable segments, usually just a few seconds long.

- The Player Takes Control: The video player on your device acts as the brain of the operation. It’s constantly monitoring your network conditions, checking things like available bandwidth and latency.

- Switch on the Fly: Before it requests the next video segment, the player makes a split-second decision. If your connection is blazing fast, it grabs a high-bitrate segment for the best possible quality. If your connection suddenly drops, it seamlessly requests a lower-bitrate segment to make sure you don’t run out of video data and hit a buffer.

This constant, segment-by-segment adjustment is completely invisible to you. All you see is a video that just works, even if the picture quality subtly shifts to match your connection speed.
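The per-segment decision can be sketched roughly like this. Real players use more sophisticated logic, so treat it as one plausible heuristic under stated assumptions: the bitrate ladder, the smoothing weight, the 80% safety margin, and the 5-second low-buffer threshold are all illustrative values, not figures from any specific player.

```python
# Hypothetical bitrate ladder in kbps, from a 360p rendition up to 4K.
LADDER_KBPS = [800, 2800, 5000, 16000]


def update_estimate(previous_kbps, sample_kbps, weight=0.3):
    """Blend the newest throughput sample into a running estimate."""
    return (1 - weight) * previous_kbps + weight * sample_kbps


def choose_bitrate(estimate_kbps, buffer_s, ladder=LADDER_KBPS,
                   safety=0.8, low_buffer_s=5.0):
    """Pick the bitrate to request for the next segment."""
    if buffer_s < low_buffer_s:
        return ladder[0]  # cushion is nearly gone: take the safest option
    budget = estimate_kbps * safety  # leave headroom for sudden dips
    fitting = [b for b in ladder if b <= budget]
    return fitting[-1] if fitting else ladder[0]


print(choose_bitrate(8000, buffer_s=20))  # → 5000
print(choose_bitrate(8000, buffer_s=2))   # → 800
```

Smoothing the throughput estimate keeps one slow segment from triggering a quality drop, while the buffer check lets the player react quickly when a stall is actually close.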

The Real-World Impact of ABR

The magic of ABR is that it’s proactive. It doesn’t wait for your video to freeze before it acts; it anticipates network issues and course-corrects ahead of time. This is exactly why you can start watching a movie on your home Wi-Fi, walk out the door, and have it continue playing on your cellular network without a jarring interruption. Your player simply switches to a rendition better suited for the new, slower connection.

Adaptive Bitrate Streaming is the primary reason modern streaming services can deliver a consistent viewing experience across a massive range of devices and network conditions. It trades moments of perfect quality for an uninterrupted playback journey.

Without ABR, we’d be stuck with a terrible choice: stream a high-quality video that buffers constantly for anyone without a perfect connection, or stream a low-quality video that disappoints everyone with fast internet. ABR gets rid of that compromise by delivering the best possible quality for each person’s unique situation. To dig deeper into the nuts and bolts, you can learn more about the mechanics of adaptive bitrate streaming in our detailed guide.

Key Benefits of Implementing ABR

For anyone delivering video content, using ABR isn’t just a nice feature—it’s a fundamental business decision that directly impacts viewer happiness and retention. The upsides are immediate and clear.

- Drastically Reduced Buffering: This is the big one. By automatically adjusting to network hiccups, ABR slashes those frustrating pauses that cause viewers to leave.

- A Better User Experience (UX): A smooth, reliable stream keeps people watching longer and makes them more likely to come back. Happy viewers are loyal viewers.

- A Much Wider Audience: ABR ensures your content is watchable for people with slower or less stable internet, including those on mobile networks or in areas with poor infrastructure.

- Faster Video Start Times: The player can kick things off by loading a low-quality segment first, getting the video on-screen almost instantly. It then ramps up to higher quality as it downloads more data. That fast start is critical—research shows that delays of just a few seconds can cause a huge number of viewers to simply give up and click away.

How CDNs Deliver a Faster Streaming Experience

If Adaptive Bitrate (ABR) is the smart driver adjusting for traffic on the final mile to your house, then a Content Delivery Network (CDN) is the superhighway system that gets the video into your city in the first place. A CDN is a massive, globally distributed network of servers built to tackle one of the biggest bottlenecks in streaming: physical distance.

Distance is a performance killer. If your main streaming server is in California, a viewer in London has to pull that data across an entire continent and an ocean. That trip introduces latency—the technical term for travel delay—which is a primary cause of streaming video buffering. A CDN’s entire purpose is to make that trip drastically shorter.

Bringing Content Closer to the Viewer

Here’s how it works: a CDN caches, or stores copies, of your video on servers strategically placed all over the globe. These servers are called Points of Presence (PoPs). When a viewer in London presses play, their request doesn’t go all the way to California. Instead, it’s automatically routed to the nearest PoP, maybe one just a few miles down the road.

Think of it like ordering a pizza. You wouldn’t call the national headquarters in another state; you call the local shop because it’s closer and can get it to your door in minutes. By slashing the physical distance data has to travel, CDNs give you a massive performance boost right out of the gate.
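To put rough numbers on the distance problem, the sketch below picks the closest PoP by great-circle distance and estimates the minimum round-trip time over fiber. It is a simplification: real CDNs typically route with DNS or anycast and weigh server load and network conditions, not geography alone. The PoP list is made up, and the fiber speed of about 200,000 km/s is an approximation.

```python
import math

# Hypothetical PoP locations as (latitude, longitude) in degrees.
POPS = {
    "london": (51.51, -0.13),
    "new_york": (40.71, -74.01),
    "los_angeles": (34.05, -118.24),
}


def distance_km(a, b):
    """Great-circle distance between two (lat, lon) points (haversine)."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def nearest_pop(viewer, pops=POPS):
    """Name of the PoP geographically closest to the viewer."""
    return min(pops, key=lambda name: distance_km(viewer, pops[name]))


def min_round_trip_ms(km, fiber_km_per_s=200_000):
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * km / fiber_km_per_s * 1000


paris = (48.86, 2.35)
print(nearest_pop(paris))  # → london
```

Calling `min_round_trip_ms(distance_km(paris, POPS["los_angeles"]))` versus the same for `POPS["london"]` shows the gap: tens of milliseconds per request to California against only a few to London, before any queuing or routing overhead is counted.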

This isn’t just theoretical. The industry’s investment in CDNs is paying off. Between 2023 and 2025, worldwide buffering times dropped by 18%, and video start times got 12% faster. You can find more details on these global streaming quality gains on senalnews.com.

The Core Benefits of Using a CDN

Plugging a CDN into your streaming workflow does more than just make things faster. It builds a more resilient and scalable delivery system, which is non-negotiable for reaching large audiences with high-quality video.

- Less Latency and Buffering: Serving video from a nearby server cuts down the data’s round-trip time, which directly reduces buffering and gets your video playing faster.

- Lower Origin Server Load: The CDN’s distributed army of servers handles the vast majority of viewer requests. This protects your main “origin” server from getting hammered, especially during a big live event.

- Massive Scalability: A CDN lets you serve a huge, global audience without having to build your own worldwide network of data centers. It can absorb sudden traffic spikes without breaking a sweat.

A Content Delivery Network fundamentally changes the delivery model from a single origin serving every viewer to many edge servers, each serving the viewers closest to it. It decentralizes content delivery, making the entire streaming ecosystem faster, more reliable, and better equipped to handle a global audience.
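A quick back-of-the-envelope calculation shows what "lower origin server load" can mean in practice. The 95% cache hit ratio below is an assumed figure for illustration, not a measurement from any CDN, and the function name is hypothetical.

```python
def origin_requests(total_requests, cache_hit_ratio):
    """Requests that still reach the origin after the CDN serves cache hits."""
    return round(total_requests * (1 - cache_hit_ratio))


# One million segment requests with an assumed 95% cache hit ratio.
print(origin_requests(1_000_000, 0.95))  # → 50000
```

Under that assumption the origin handles one request in twenty, which is why a traffic spike that would swamp a lone server can pass without incident.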

ABR and CDNs: The Perfect Partnership

While both ABR and CDNs are fantastic weapons in the fight against buffering, they solve different parts of the puzzle. ABR is focused on the viewer’s local connection—the “last mile”—while a CDN optimizes the “middle mile” of the internet, ensuring data gets to that local network as fast as possible.

When you use them together, they create a nearly bulletproof delivery system. The CDN gets the video segments to the viewer’s doorstep with lightning speed, and ABR makes sure the player is asking for the perfectly-sized segment for that viewer’s specific connection. It’s a two-pronged attack that tackles the biggest variables in streaming. For a deeper dive, check out our complete guide on using a CDN for video streaming and see how this powerful combo has become the gold standard for any serious streaming service.

The Future of Seamless Streaming

The battle against the dreaded spinning wheel is getting a lot smarter. While Adaptive Bitrate Streaming and CDNs have given us a solid foundation for today’s streaming, the next big leap is all about making video buffering a genuine relic of the past. The whole game is shifting toward making video data smaller, faster, and more resilient.

One of the most exciting frontiers is in video compression. New technologies are finding clever ways to shrink file sizes without turning your video into a pixelated mess. This means high-quality video can finally flow smoothly, even when your network is having a bad day. And right at the center of this evolution is artificial intelligence.

Smarter Compression with AI and Advanced Codecs

Artificial intelligence is no longer just a buzzword; it’s a core part of modern video processing that tackles the buffering problem at its source. Instead of using a blunt, one-size-fits-all approach, AI-powered compression can analyze a video frame by frame, making intelligent decisions on how to trim the data with the least possible impact on what you see.

This smarter approach gets a massive boost from advanced video codecs like AV1. A codec is simply the engine that compresses and decompresses video, and AV1 is miles ahead of its older cousins in terms of efficiency. When you put these two innovations together, the results are pretty impressive:

- AI-Driven Compression: Think of it like a master artist. Neural networks study the video content and apply the perfect compression techniques to each scene, keeping the quality high while slashing the data footprint.

- GPU-Accelerated Encoding: By using the raw power of graphics processors, the entire encoding process gets a speed boost, making it possible to create these highly optimized streams on the fly.

With the demand for video growing every day, the pressure is on to handle streaming loads without a hitch. Platforms that bake these AI-driven workflows into their systems are the ones that will win, delivering buffer-free streams even when a viewer’s connection is less than ideal. You can get more details on the future demands of video streaming on gomomento.com.

New Frontiers in Delivery Infrastructure

It’s not just about making the files smaller; we’re also building faster and more reliable highways to deliver them. The rollout of 5G networks is a massive piece of this puzzle, promising incredibly low latency and huge bandwidth right to our phones—which have always been a tricky spot for high-quality streaming.

The next era of streaming isn’t about a single magic bullet. It’s about a multi-layered strategy where smarter files travel over faster, more decentralized networks to create a profoundly more reliable experience.

The infrastructure itself is getting a brain transplant, too. Instead of just relying on a single CDN, many major platforms are now using a multi-CDN strategy. This lets them intelligently switch traffic between different networks in real time, always choosing the best-performing route for each viewer. It adds a crucial layer of redundancy and resilience.
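As a rough sketch of what multi-CDN switching might look like, the function below picks the best-performing healthy network from recent measurements. The metric names, the 2% error threshold, and the CDN labels are assumptions for illustration; production systems weigh many more signals, including cost and region.

```python
def pick_cdn(metrics, max_error_rate=0.02):
    """Return the healthy CDN with the best recent throughput.

    metrics maps a CDN name to {"throughput_kbps": ..., "error_rate": ...}.
    """
    healthy = {name: m for name, m in metrics.items()
               if m["error_rate"] <= max_error_rate}
    candidates = healthy or metrics  # if none are healthy, consider them all
    return max(candidates, key=lambda name: candidates[name]["throughput_kbps"])


recent = {
    "cdn_a": {"throughput_kbps": 9000, "error_rate": 0.10},  # fast but failing
    "cdn_b": {"throughput_kbps": 7000, "error_rate": 0.01},
}
print(pick_cdn(recent))  # → cdn_b
```

Filtering on error rate before comparing speed is the redundancy at work: a network that is fast on paper but dropping requests gets skipped.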

Even more interesting, some services are exploring peer-to-peer (P2P) delivery. In a P2P setup, viewers watching the same stream actually share small pieces of the video with each other. This takes a huge load off the central servers and builds a decentralized delivery network that actually gets stronger and more efficient as more people watch. All of these advancements point to a future dedicated to one simple goal: keeping the content flowing, no matter what.

Got Questions About Buffering? We’ve Got Answers.

Let’s tackle some of the most common questions people have about video buffering. Think of this as your quick-start guide to understanding the practical side of keeping your streams running smoothly.

Is It Possible to Get Rid of Buffering Completely?

In a perfect world, yes. In reality, it’s next to impossible to guarantee a buffer-free stream for every single person, every single time. There are just too many variables, like a viewer’s sudden drop in Wi-Fi signal, that are completely out of your control.

The goal isn’t perfection, but resilience. By using smart tools like Adaptive Bitrate Streaming (ABR) and a solid Content Delivery Network (CDN), you can dramatically cut down on buffering incidents. These technologies work together to make your stream flexible, so it can adjust on the fly to a viewer’s connection instead of just stopping cold.

Does 4K Video Cause More Buffering?

Absolutely. Higher quality means more data, and a 4K stream is a data hog. A 4K frame has four times as many pixels as a 1080p frame, so a 4K stream can demand several times the bandwidth of a standard 1080p stream, which puts a lot of pressure on the viewer’s internet connection.

This is exactly where ABR becomes your best friend. If a viewer’s network can’t handle the full 4K stream, the player automatically drops down to 1080p or 720p. This quick switch keeps the video playing, which is far better than a beautiful, crisp image that’s frozen on screen.

A smooth stream at a lower resolution is almost always better than a stalled stream at perfect 4K. ABR makes that smart trade-off for you, ensuring your audience stays locked in, even if their connection takes a temporary hit.
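The jump in data between resolutions is easy to check with pixel counts. Keep in mind that this is only a rough guide: actual bitrates depend on the codec and the content, so bandwidth does not scale exactly with pixels. The helper name below is hypothetical.

```python
UHD_4K = (3840, 2160)
FULL_HD = (1920, 1080)
HD_720 = (1280, 720)


def pixel_ratio(res_a, res_b):
    """How many times more pixels per frame res_a has than res_b."""
    return (res_a[0] * res_a[1]) / (res_b[0] * res_b[1])


print(pixel_ratio(UHD_4K, FULL_HD))  # → 4.0
print(pixel_ratio(FULL_HD, HD_720))  # → 2.25
```

Each step down the ladder sheds a large share of the pixels, which is why dropping from 4K to 1080p or 720p frees up so much bandwidth so quickly.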

How Can I Tell if Buffering Is My Problem or the Streaming Service’s?

It’s the classic “is it me or you?” problem. To figure it out, start by checking your own setup. Run an internet speed test to make sure you’re actually getting the bandwidth you pay for.

Next, try watching on a different device or network. If the video plays fine on your phone using cellular data but constantly buffers on your TV over Wi-Fi, the issue is probably with your home network. If it buffers everywhere you try to watch, the problem likely lies with the streaming provider’s servers or CDN.
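The checks above boil down to a short decision procedure. The sketch below encodes it as a function; the function name and the wording of the results are illustrative, and the outcome is a likely cause, not a definitive diagnosis.

```python
def diagnose(speed_ok, buffers_on_home_wifi, buffers_on_other_network):
    """Suggest the most likely source of buffering from three quick checks."""
    if not speed_ok:
        return "viewer: connection is slower than expected"
    if buffers_on_home_wifi and buffers_on_other_network:
        return "provider: servers or CDN"
    if buffers_on_home_wifi:
        return "viewer: home network or device"
    if buffers_on_other_network:
        return "viewer: the other network"
    return "no buffering reproduced"


# Fine on cellular data, but buffers on the TV over Wi-Fi.
print(diagnose(True, True, False))  # → viewer: home network or device
```

The speed test comes first because a connection that falls short of the expected speed explains buffering on its own, regardless of what the other checks show.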

Ready to build a streaming platform that puts buffering in the rearview mirror? LiveAPI gives you the tools you need, from built-in ABR to powerful CDN integrations, so you can deliver a rock-solid viewing experience. Start building a better stream at https://liveapi.com.