Ever heard of Adaptive Bitrate (ABR) streaming? It’s a game-changer in video delivery. In simple terms, it’s a clever technique that adjusts the quality of a video you’re watching in real-time based on your internet connection.

Think of it this way: when your Wi-Fi is struggling, ABR automatically dials down the video quality to keep it playing without freezing. But the moment your connection speeds up, it shifts right back into beautiful high-definition. This constant, seamless adjustment is what finally kills that dreaded buffering wheel.

How Adaptive Bitrate Streaming Ends Buffering

We’ve all been there: the video freezes right at the most intense moment. That spinning circle isn’t just an irritation; it’s a sign that the video delivery system has failed. The root cause is almost always a mismatch between the video’s bitrate (the amount of data needed for each second of playback) and your network’s ability to download that data fast enough.
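To put rough numbers on that mismatch, here is a minimal Python sketch. The function names and the figures are illustrative assumptions, not measurements from any real player.

```python
def download_time_seconds(bitrate_mbps, video_seconds, throughput_mbps):
    """Seconds needed to download `video_seconds` of video at the given throughput."""
    megabits_needed = bitrate_mbps * video_seconds
    return megabits_needed / throughput_mbps


def will_stall(bitrate_mbps, throughput_mbps):
    """Playback eventually stalls when video data is consumed faster than it arrives."""
    return bitrate_mbps > throughput_mbps


# Hypothetical example: a 5 Mbps video on a 2.5 Mbps connection.
seconds_needed = download_time_seconds(bitrate_mbps=5.0, video_seconds=10.0, throughput_mbps=2.5)
print(seconds_needed, will_stall(5.0, 2.5))
```

With these made-up numbers, 10 seconds of video takes 20 seconds to arrive, so the player falls further behind the longer it plays.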

Before ABR became the industry standard, streaming was a “one-size-fits-all” headache. A single, high-quality video file was sent out to everyone, no matter their connection speed or device. If your internet was having a bad day, the video player just couldn’t keep up, causing those frustrating stops and starts. This was a massive problem for viewers everywhere.

The Modern Solution to an Old Problem

Adaptive bitrate streaming flips the script entirely. Instead of one file, it creates multiple versions of the same video, each at a different quality level—from a grainy low-resolution version all the way up to crisp 4K.

Your video player then acts like an intelligent traffic controller. It constantly monitors your internet connection and, if it detects a slowdown, it seamlessly switches to a lower-quality stream to keep things moving. Once your bandwidth opens up again, it smartly switches back to a higher-quality version for the best picture.

This technology is now the engine behind modern video. It’s no surprise that ABR has been so widely adopted—it directly fixes one of the biggest frustrations in online viewing. In fact, adaptive bitrate streaming now powers over half of the world’s mobile data traffic, which shows just how essential it has become. You can learn more about the common causes of buffering when streaming in our detailed guide.

ABR is not just about avoiding buffering; it’s about delivering the best possible viewing experience a user’s connection can support at any given moment.

By dynamically adjusting to network changes, ABR ensures viewers stay glued to the screen and content plays without a hitch. It turns a potentially frustrating experience into a completely smooth and seamless one.

The Evolution of Modern Streaming Technology

To really get why adaptive bitrate streaming feels so seamless today, it helps to remember just how painful online video used to be. In the early days, you had to download an entire video file before you could even hit play. On the screechy dial-up connections of the time, this was an exercise in patience.

Things got a bit better with progressive downloads, which let you start watching while the rest of the file downloaded in the background. But it was still a fragile, single-file system. The moment your connection hiccuped, you were staring at the dreaded buffering wheel, completely killing the experience.

The Rise and Fall of Proprietary Protocols

For a long time, watching a video online meant dealing with a maze of proprietary plugins. Technologies like RealPlayer, Windows Media Player, and eventually Adobe Flash all required users to install separate software just to watch something. It was a messy, fragmented world where compatibility was a constant headache for everyone.

Adobe Flash, in particular, grew to dominate online video. But its plugin-based approach started showing serious cracks as the web went mobile. It was a resource hog, drained batteries, and posed security risks, all of which set the stage for a massive change.

The entire landscape shifted in the mid-2000s. YouTube launched in 2005, and 2007 brought both Netflix’s streaming service and Justin.tv, the site that later became Twitch. This explosion of online video put a huge strain on the old delivery methods. The final nail in the coffin came in 2010, when Apple publicly confirmed that Flash would never be allowed on its iOS devices. That move effectively marked the end of an era and forced the industry to find a new, open standard. You can dive deeper into the history of streaming protocols to see how it all unfolded.

A New Era Based on HTTP

The answer was hiding in plain sight: HTTP (Hypertext Transfer Protocol). This is the very same protocol your browser uses to load websites, images, and text. By building streaming on top of this universal foundation, developers could finally ditch the clunky plugins for good.

HTTP-based streaming meant video could be delivered using the same web server infrastructure that already powered the rest of the internet, dramatically simplifying deployment and improving accessibility.

This shift was about more than just solving the plugin problem. It paved the way for the intelligent, responsive systems we have now. Once video was chopped into small, manageable chunks and delivered over standard web protocols, video players could get a whole lot smarter. They could now request different segments based on real-time network conditions, which is the core innovation that unlocked adaptive bitrate streaming.

Breaking Down the Adaptive Streaming Process

So, how does adaptive bitrate streaming pull off that magic trick of a perfectly smooth, buffer-free video? It’s not one single action but a clever, three-step process working tirelessly behind the scenes. This system turns a single video file into a flexible stream ready for just about any network condition you can throw at it.

A good way to think about it is like a professional kitchen gearing up for a dinner rush. Instead of cooking one massive pot of stew, the chefs prepare the same dish in various portion sizes—small, medium, and large. That way, they can instantly serve the right-sized meal to each customer based on their appetite. ABR works on a similar principle, just with data instead of food.

Step 1: Encoding and Transcoding the Master File

It all starts with a single, high-quality master video file. This source file gets fed into an encoder, which kicks off a crucial process called transcoding. In this stage, that one master file is duplicated and converted into multiple, separate versions, often called “renditions.”

Each of these renditions is created with a different bitrate and resolution. For instance, a single 4K video might be transcoded into a whole ladder of distinct versions:

- 1080p (Full HD): The top-tier version for viewers on a strong Wi-Fi or wired connection.

- 720p (HD): A solid middle-of-the-road option for stable mobile connections.

- 480p (Standard Definition): A lower-quality version perfect for weaker or fluctuating signals.

- 360p (Low Resolution): A lightweight option to keep the video playing even when a connection is barely there.
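The ladder above can be sketched as a small data structure. This is a minimal Python illustration: the `Rendition` class, the `renditions_for_source` helper, and the bitrate values are all assumptions made for the example, not settings from any particular encoder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    width: int
    height: int
    bitrate_kbps: int  # illustrative target, not a recommendation


# Hypothetical ladder matching the four quality levels listed above.
LADDER = [
    Rendition("1080p", 1920, 1080, 5000),
    Rendition("720p", 1280, 720, 2800),
    Rendition("480p", 854, 480, 1400),
    Rendition("360p", 640, 360, 800),
]


def renditions_for_source(source_height, ladder=LADDER):
    """Keep only renditions that do not exceed the master's resolution (no upscaling)."""
    return [r for r in ladder if r.height <= source_height]


print([r.name for r in renditions_for_source(2160)])  # a 4K master gets the full ladder
print([r.name for r in renditions_for_source(720)])   # a 720p master skips the 1080p rung
```

Filtering by the source height reflects a common design choice: there is little point in encoding a rendition larger than the master file itself.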

This initial prep work is the foundation for everything that follows. If you’re curious about the nitty-gritty of this conversion, our guide explaining what is video transcoding dives much deeper. Without this menu of pre-made quality levels, the player would simply have nothing to switch between.

Step 2: Segmenting the Video into Chunks

With all the different renditions created, the next step is segmentation. Each video version—from the crisp 1080p stream all the way down to the 360p one—is sliced into small, manageable pieces, or “chunks.” These chunks are usually just a few seconds long.

Crucially, every chunk gets a sequence number, and all the renditions are sliced at the exact same time intervals. This means chunk #25 of the 1080p stream corresponds to the exact same moment in the video as chunk #25 of the 360p stream. This perfect alignment is what lets the video player jump between quality levels without you ever noticing a skip.
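A short Python sketch shows why aligned chunks make switching safe. The 4-second chunk length and the file naming scheme are assumptions chosen for illustration.

```python
SEGMENT_SECONDS = 4.0  # assumed chunk length, identical for every rendition


def segment_index(playback_seconds, segment_seconds=SEGMENT_SECONDS):
    """Return the 1-based chunk number that contains the given playback position."""
    return int(playback_seconds // segment_seconds) + 1


def segment_path(rendition, index):
    """Build a hypothetical file path for one chunk of one rendition."""
    return f"{rendition}/segment_{index:05d}.ts"


# The same playback position maps to the same chunk number in every rendition,
# so the player can swap "1080p" for "360p" at a chunk boundary without a skip.
position = 96.0
index = segment_index(position)
print(segment_path("1080p", index))
print(segment_path("360p", index))
```

Because the chunk number depends only on the playback position and the shared chunk length, both paths point at the same moment in the video.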

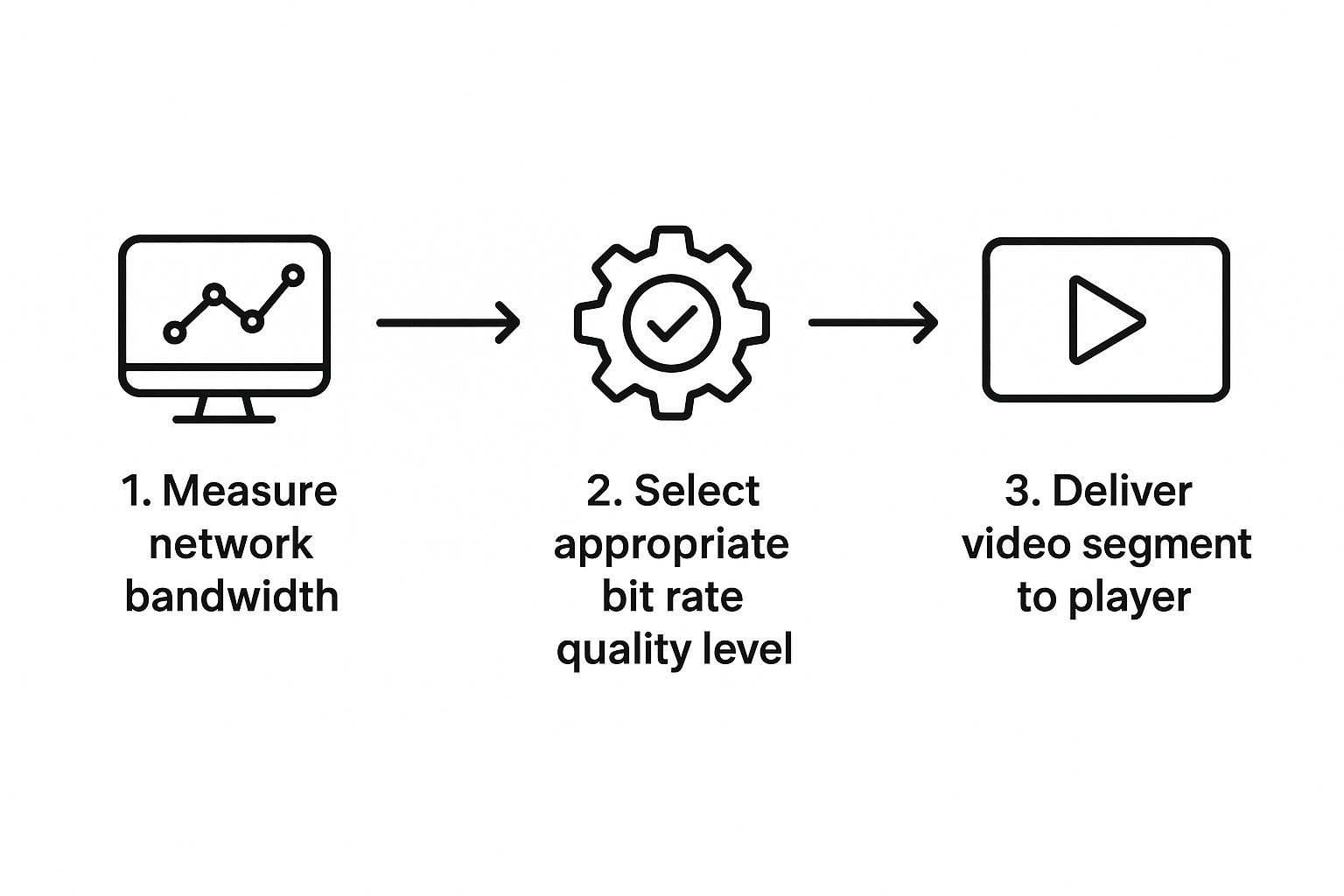

Step 3: Delivering the Right Chunk at the Right Time

This final step is where the intelligence of the system really comes into play. When you hit play, your video player doesn’t just start downloading the entire file. It instantly becomes a smart, dynamic decision-maker.

The infographic below neatly illustrates the logic the player follows, moment by moment.

As you can see, the player is constantly checking your network speed to request the best possible video chunk, creating that seamless viewing experience we all expect.

Here’s a simple breakdown of what’s happening on your device:

- It Measures: The player first takes a reading of your current internet bandwidth. Is it fast? Slow? Fluctuating?

- It Chooses: Based on that reading, it selects the highest-quality chunk it can confidently download before it’s time to play it.

- It Requests: It sends a request to the server asking for that specific chunk.

- It Repeats: Before the current chunk even finishes playing, the player is already re-evaluating the network and requesting the next chunk, adjusting the quality up or down as needed.

This constant cycle of measuring, choosing, and requesting is the very heart of adaptive bitrate streaming. It ensures the video just keeps playing, delivering the best quality your connection can handle at any given second.
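The measure, choose, request, repeat loop can be sketched in a few lines of Python. This is a simplified throughput-based rule, not the algorithm of any specific player; the ladder values, the `safety_factor`, and the function names are assumptions for illustration.

```python
# Hypothetical ladder: rendition name -> bitrate in kbps.
LADDER_KBPS = {"1080p": 5000, "720p": 2800, "480p": 1400, "360p": 800}


def measure_throughput_kbps(bytes_downloaded, seconds):
    """Estimate throughput from how long the last chunk took to download."""
    return (bytes_downloaded * 8 / 1000) / seconds


def choose_rendition(throughput_kbps, ladder=LADDER_KBPS, safety_factor=0.8):
    """Pick the highest bitrate that fits within a safety margin of the measured throughput."""
    budget = throughput_kbps * safety_factor
    affordable = [name for name, kbps in ladder.items() if kbps <= budget]
    if not affordable:
        # Nothing fits: fall back to the lowest rung so playback can continue.
        return min(ladder, key=ladder.get)
    return max(affordable, key=ladder.get)


def simulate(throughput_samples_kbps):
    """Make one decision per chunk, re-evaluating the network each time."""
    return [choose_rendition(sample) for sample in throughput_samples_kbps]


print(simulate([7000, 4000, 500, 4000]))
```

The safety factor leaves headroom so that a small dip in bandwidth does not immediately cause a stall. Real players usually also take the buffer level into account.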

HLS vs. MPEG-DASH: A Tale of Two Streaming Protocols

While adaptive bitrate streaming is the core concept, the way it’s actually implemented comes down to specific protocols. Think of them as different shipping companies—both get your package where it needs to go, but they use their own set of rules, labels, and trucks. In video streaming, the two heavyweights are Apple’s HLS and the open-standard MPEG-DASH.

Getting a handle on their differences is crucial for anyone building streaming experiences. The protocol you choose impacts everything from which devices can play your video to how much delay your viewers experience.

The Powerhouse Behind Apple: HLS

HLS (HTTP Live Streaming) was born out of necessity. Apple developed it back in 2009 to get video streaming smoothly on iPhones, which famously didn’t support the dominant Adobe Flash technology of the time. That origin story explains its greatest strength: it just works across the entire Apple ecosystem.

If you’re watching a video on an iPhone, iPad, Apple TV, or a Mac, you’re almost certainly using HLS. This native support makes it the path of least resistance for reaching Apple users, as no special plugins or player tweaks are needed. HLS works by chopping the video into small segment files, traditionally MPEG-2 Transport Stream (.ts) files and, in newer versions of the protocol, fragmented MP4 as well, and creating an M3U8 playlist file that tells the player which chunk to grab next. To really dig into the mechanics, check out our complete guide on what is HLS streaming.
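To make the playlist idea concrete, the Python sketch below writes two kinds of M3U8 text: a master playlist that lists the available renditions, and a media playlist that lists the chunks of one rendition in order. The tags are standard HLS tags, but the helper names, file names, and bitrates are assumptions for illustration, and real playlists usually carry more attributes.

```python
def build_master_playlist(variants):
    """Return a master playlist that points to one media playlist per rendition."""
    lines = ["#EXTM3U"]
    for bandwidth_bps, width, height, uri in variants:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth_bps},RESOLUTION={width}x{height}"
        )
        lines.append(uri)
    return "\n".join(lines) + "\n"


def build_media_playlist(segment_count, segment_seconds=4):
    """Return an on-demand media playlist that lists the chunks in playback order."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{segment_seconds}",
        "#EXT-X-MEDIA-SEQUENCE:1",
    ]
    for index in range(1, segment_count + 1):
        lines.append(f"#EXTINF:{segment_seconds:.3f},")
        lines.append(f"segment_{index:05d}.ts")
    lines.append("#EXT-X-ENDLIST")  # marks the playlist as complete (not live)
    return "\n".join(lines) + "\n"


print(build_master_playlist([
    (5000000, 1920, 1080, "1080p/index.m3u8"),
    (800000, 640, 360, "360p/index.m3u8"),
]))
print(build_media_playlist(segment_count=3))
```

The player reads the master playlist once to learn which renditions exist, then follows the media playlist of whichever rendition it has chosen.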

The Flexible Open Standard: MPEG-DASH

On the other side, we have MPEG-DASH (Dynamic Adaptive Streaming over HTTP). Unlike HLS, which started as an Apple-specific solution, DASH was designed from the ground up by the Moving Picture Experts Group (MPEG) to be a universal, open standard. The goal was to create one protocol to rule them all and end the fragmentation in the streaming world.

DASH’s superpower is its flexibility. It’s completely codec-agnostic, meaning it doesn’t care if your video is encoded in H.264, H.265, VP9, or the latest AV1 format. It uses a manifest file called an MPD (Media Presentation Description) to point the player to all the available video and audio chunks. This adaptability has made it a favorite for Android, smart TVs, and web browsers, where JavaScript players handle DASH through the Media Source Extensions API rather than relying on built-in support.
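As a rough illustration of what a player reads from an MPD, the Python sketch below parses a heavily trimmed sample manifest with the standard library. The sample omits segment addressing and audio, and its ids, bitrates, and codec strings are assumptions for the example.

```python
import xml.etree.ElementTree as ET

# Trimmed, hypothetical MPD: real manifests also describe how to locate segments.
SAMPLE_MPD = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static"
     mediaPresentationDuration="PT60S" minBufferTime="PT2S"
     profiles="urn:mpeg:dash:profile:isoff-on-demand:2011">
  <Period>
    <AdaptationSet mimeType="video/mp4" segmentAlignment="true">
      <Representation id="1080p" bandwidth="5000000" width="1920" height="1080" codecs="avc1.640028"/>
      <Representation id="360p" bandwidth="800000" width="640" height="360" codecs="avc1.4d401e"/>
    </AdaptationSet>
  </Period>
</MPD>"""

MPD_NAMESPACE = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}


def list_representations(mpd_text):
    """Return (id, bandwidth in bits per second, height) for each video representation."""
    root = ET.fromstring(mpd_text)
    found = []
    for rep in root.findall(".//mpd:Representation", MPD_NAMESPACE):
        found.append((rep.get("id"), int(rep.get("bandwidth")), int(rep.get("height"))))
    return found


print(list_representations(SAMPLE_MPD))
```

Each `Representation` plays the same role as a rendition in the ladder: the player compares the `bandwidth` values against its throughput estimate when it picks a quality level.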

While HLS gained massive traction through its tight integration with Apple’s ecosystem, MPEG-DASH emerged as the industry’s answer for a unified, vendor-neutral standard for adaptive bitrate streaming.

So, how do these two industry titans really compare? Each has its own strengths and weaknesses that become clear when you see them side-by-side.

HLS vs MPEG-DASH Protocol Comparison

This table breaks down the core differences between the two leading adaptive bitrate streaming protocols.

| Feature | HLS (HTTP Live Streaming) | MPEG-DASH (Dynamic Adaptive Streaming over HTTP) |
|---|---|---|
| Primary Developer | Apple | MPEG (an ISO/IEC standards group) |
| Primary Support | iOS, macOS, iPadOS, Safari, Apple TV | Android, Chrome, Firefox, Smart TVs, Game Consoles |
| Manifest File | .m3u8 (Playlist) | .mpd (Media Presentation Description) |
| Codec Support | Primarily H.264 and H.265 (HEVC) | Codec-agnostic (supports H.264, VP9, AV1, etc.) |
| Latency | Traditionally higher, but improving with LL-HLS | Generally offers lower latency out of the box |
| Adoption | Over 70% of the streaming market share | Growing steadily, especially in web and Android |

In the end, your choice often boils down to your audience. If you’re targeting Apple users above all else, HLS offers a seamless, native experience. But for reaching the widest possible audience across the web, Android, and smart TVs, the open nature and powerful flexibility of MPEG-DASH make it an incredibly strong choice for modern streaming.

The Future of Streaming with AI and Machine Learning

Standard adaptive bitrate streaming is already pretty slick, but the next big leap is being driven by artificial intelligence and machine learning. Today’s ABR systems are mostly reactive. They wait for your internet connection to slow down and then switch to a lower-quality stream. The future is all about being predictive.

Imagine a system that analyzes huge pools of data—things like network history, what time it is, and even the type of video you’re watching—to guess when your connection might dip before it actually happens. This lets the video player switch to a more stable bitrate ahead of time, completely avoiding those annoying quality drops or the spinning buffer wheel. It’s like the difference between a car that jolts after hitting a pothole and one that sees it coming and glides around it.

Building Intelligent Streaming Clients

This move from reactive to predictive streaming is happening thanks to self-learning algorithms built right into the video player. These smart clients go way beyond just measuring your current bandwidth; they start to learn from their surroundings to make better choices in real-time.

This kind of forward-thinking approach is already making the Quality of Experience (QoE) better for viewers. Self-learning clients often use algorithms like Q-learning to pick the right video quality based on network throughput and how full the buffer is, making the whole system more efficient. You can get into the nitty-gritty of how self-learning improves adaptive bitrate streaming and what it means for the end-user.
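For a feel of how Q-learning fits in, here is a minimal tabular sketch in Python. The state is assumed to be a pair of coarse buckets (throughput, buffer level), the actions are indices into a hypothetical four-rung ladder, and the reward function and its penalty weight are made up for illustration. A production client would be considerably more elaborate.

```python
from collections import defaultdict

ACTIONS = [0, 1, 2, 3]  # indices into a hypothetical four-rung bitrate ladder


def reward(bitrate_kbps, rebuffer_seconds, rebuffer_penalty=4.0):
    """Hypothetical QoE reward: higher bitrate is good, stalls are penalized heavily."""
    return bitrate_kbps / 1000.0 - rebuffer_penalty * rebuffer_seconds


def q_update(q, state, action, observed_reward, next_state, alpha=0.1, gamma=0.9):
    """Standard Q-learning update for one (state, action, reward, next_state) step."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    target = observed_reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])


def best_action(q, state):
    """Pick the rendition index with the highest learned value for this state."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


# States are (throughput_bucket, buffer_bucket) pairs; unseen entries default to 0.0.
q_table = defaultdict(float)
state = ("medium_throughput", "full_buffer")
q_update(q_table, state, action=2, observed_reward=reward(2800, 0.0), next_state=state)
print(best_action(q_table, state))
```

Over many chunks, the table learns which quality level tends to pay off for each combination of throughput and buffer level, instead of relying on a fixed rule.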

These systems also introduce a sense of fairness into the equation.

AI-driven ABR can balance stream quality for multiple people on the same Wi-Fi network. So instead of one person’s 4K stream hogging all the bandwidth while another person’s video buffers endlessly, the system can distribute the resources more fairly so everyone has a good time.

Optimizing Content Delivery with AI

AI isn’t just making the player smarter; it’s also changing how video is prepped and delivered from the server. Machine learning models can look at a video scene-by-scene and apply the most efficient encoding settings—a technique called content-aware encoding.

Think about it: a high-octane action sequence needs a much higher bitrate to look sharp than a slow scene of two people talking. An AI model can make that call for every scene, which often leads to a smaller overall file size without anyone noticing a drop in quality. This gives content providers some huge wins:

- Reduced Storage Costs: Smaller video files directly translate to lower cloud storage bills.

- Faster Start Times: Lighter files are delivered quicker, so the video starts playing almost instantly.

- Improved ABR Performance: When the different quality levels are encoded more efficiently, the player can switch between them more smoothly.
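The core idea of spending bits where the picture needs them can be sketched with simple arithmetic. The Python example below is a plain proportional allocation, not a machine learning model. The complexity scores are assumed inputs (in practice they would come from an analysis step), and the scenes are assumed to be equally long.

```python
def allocate_scene_bitrates(complexities, average_kbps):
    """Split a bitrate budget across equal-length scenes in proportion to complexity."""
    mean_complexity = sum(complexities) / len(complexities)
    return [average_kbps * c / mean_complexity for c in complexities]


# Hypothetical scores: a quiet dialogue scene (1.0) and an action sequence (3.0).
bitrates = allocate_scene_bitrates([1.0, 3.0], average_kbps=2000)
print(bitrates)
```

The average stays at the 2000 kbps budget, but the action sequence receives three times the bitrate of the dialogue scene instead of both getting the same flat rate.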

As these AI-powered tools become the new standard, the ABR systems of tomorrow will create a viewing experience that isn’t just free from buffering but is also incredibly efficient and tailored to the moment.

Common Questions About Adaptive Bitrate Streaming

Even after you get the basic concept of adaptive bitrate streaming, a few practical questions almost always pop up. It’s a technology that touches everything from the video player on a phone to the global networks that deliver the content, so it’s natural to wonder how it all fits together.

Let’s clear up a few of the most common questions to help you get a better handle on how ABR works in the real world.

What Is the Main Difference Between Adaptive and Multi-Bitrate Streaming?

This is a big one, because on the surface, they sound almost identical. Both involve making multiple versions of a video at different quality levels. The real difference comes down to one simple thing: who’s in charge of the experience.

With old-school multi-bitrate streaming, the viewer is in the driver’s seat. You’ve seen this before—it’s the little gear icon that lets you manually pick “480p,” “720p,” or “1080p.” It puts the burden on you to guess what your internet connection can handle. Guess wrong, and hello, buffering wheel.

Adaptive bitrate (ABR) streaming flips the script. It’s a smart, automated system where the video player itself makes the decisions. It’s constantly monitoring your network speed in real-time and picking the best possible quality from moment to moment. It’s this hands-off, dynamic adjustment that makes modern streaming feel so smooth.

Multi-bitrate streaming gives the viewer options; adaptive bitrate streaming makes the decisions. That automation is the secret sauce that kills buffering and keeps people watching.

How Does ABR Affect Video Latency in Live Streams?

While ABR is a huge win for a smooth, buffer-free stream, it does come with a trade-off: a bit of a delay, or latency, especially in live events. This happens because the whole process isn’t instant. A few crucial steps have to happen behind the scenes, and each one adds a few precious seconds.

Here’s a quick look at where that delay comes from:

- Encoding and Segmenting: The live feed has to be processed into all the different quality levels and then chopped up into small, manageable chunks.

- Manifest Creation: A playlist file, or manifest, has to be constantly updated to tell the player where to find the newest chunks.

- Player Buffering: To guard against network stumbles, the player always downloads several chunks ahead of what you’re currently watching, creating a time buffer.

Each of these steps adds to the gap between something happening live and you seeing it on your screen. While newer tech like Low-Latency HLS is shrinking this gap significantly, there’s often a direct trade-off to consider: more stability from ABR can sometimes mean a little more latency.
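A back-of-the-envelope model makes the trade-off visible. The Python sketch below is a rough estimate under stated assumptions: a chunk cannot be published until it is fully recorded, the player holds a fixed number of chunks in its buffer, and the encode and delivery times are made-up constants.

```python
def estimate_live_latency_seconds(segment_seconds, buffered_segments,
                                  encode_seconds=1.0, delivery_seconds=1.0):
    """Rough glass-to-glass delay for a segmented live stream."""
    packaging_delay = segment_seconds  # a chunk must be complete before it is published
    player_buffer = segment_seconds * buffered_segments
    return encode_seconds + packaging_delay + delivery_seconds + player_buffer


print(estimate_live_latency_seconds(segment_seconds=6, buffered_segments=3))
print(estimate_live_latency_seconds(segment_seconds=2, buffered_segments=3))
```

Under these assumptions, shrinking chunks from 6 seconds to 2 seconds cuts the estimate from 26 seconds to 10 seconds, at the cost of a smaller safety buffer against network hiccups.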

Does Using a CDN Help with Adaptive Bitrate Streaming?

Absolutely. You could even argue that a Content Delivery Network (CDN) is an essential partner for doing ABR right, especially at scale. A CDN is just a big network of servers spread all over the world, with the simple goal of storing content physically closer to your viewers.

That proximity makes a massive difference for ABR. When the video chunks only have to travel a few miles from a local server instead of halfway across the country, the connection is naturally faster and more reliable.

A stable, speedy connection gives the ABR player the confidence it needs to make better decisions. It can stick with higher-quality streams for longer and is far less likely to downgrade because of a random network hiccup. In short, a CDN creates the perfect playing field for ABR to shine, delivering a flawless experience to every single viewer.

Ready to stop worrying about infrastructure and start building amazing video experiences? LiveAPI provides developers with a powerful and easy-to-use platform for live and on-demand streaming, complete with robust adaptive bitrate capabilities. Integrate high-quality video into your application today by visiting https://liveapi.com.
